Artificial Intelligence Objectives

Objective 1

1. Create and integrate cognitive artificial intelligence into applications.

This chatbot demonstrates the integration of cognitive AI principles into a practical C++ application through its modular architecture and intelligent response system. The application implements multiple AI personalities (FriendlyBot and SmartBot) that exhibit different cognitive behaviors and decision-making patterns, showcasing how AI can be embedded into software systems. The chatbot processes user input through keyword analysis, maintains conversational context, and generates contextually appropriate responses based on user sentiment and interaction history. The polymorphic design allows for easy extension of AI capabilities, while the conversation management system demonstrates how cognitive AI can maintain state and learn from user interactions over time through persistent data storage and statistical analysis.
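The polymorphic design described above can be sketched as follows. The original application is written in C++; this is a minimal Python illustration of the same idea, and the response strings, the `handle` method, and the `history` attribute are assumptions made for the example rather than the project's actual code.

```python
from abc import ABC, abstractmethod


class Bot(ABC):
    """Common interface; each personality overrides respond()."""

    def __init__(self):
        self.history = []  # conversation state kept per bot (assumed design)

    def handle(self, message):
        # Record the message, then let the concrete personality answer it.
        self.history.append(message)
        return self.respond(message.lower())

    @abstractmethod
    def respond(self, message):
        ...


class FriendlyBot(Bot):
    def respond(self, message):
        if "hello" in message:
            return "Hi there! Great to see you!"
        return "Tell me more, I'm listening!"


class SmartBot(Bot):
    def respond(self, message):
        if message.rstrip().endswith("?"):
            return f"Good question. That is message {len(self.history)} so far."
        return "Noted."


# The caller only depends on the Bot interface, so new personalities
# can be added without changing this loop.
bots = [FriendlyBot(), SmartBot()]
replies = [bot.handle("Hello, how are you?") for bot in bots]
```

Adding a third personality would only require another subclass of `Bot`, which is the kind of extension the polymorphic design is meant to make easy.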

Multi-Personality Maritime AI Chatbot

This AI chatbot demonstrates successful integration of cognitive artificial intelligence into a practical desktop application. It uses the llama-cpp-python library to run the Dolphin3.0-Llama3.1-8B language model directly behind a Python Tkinter GUI. The cognitive AI component is showcased through the chatbot's personality system, where the same underlying model adapts its responses, vocabulary, and knowledge focus to the selected maritime persona (Old Salt, Pirate Captain, Navy Officer, or Fisherman). This demonstrates cognitive flexibility: rather than returning pre-written answers, the chatbot generates responses on the fly that fit the topic under discussion and sound like the chosen character. User input is processed in real time, and the personality-driven replies reflect both the user's query and the chosen maritime context. This represents a complete end-to-end AI application rather than just a theoretical implementation.
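One way the persona selection could work is to prefix each question with instructions for the chosen character. The sketch below is illustrative only: the four persona names come from the description above, but the instruction wording, the `PERSONAS` table, and the `persona_prompt` helper are hypothetical and not taken from the project.

```python
# Illustrative persona instructions; the project's actual wording is not shown.
PERSONAS = {
    "Old Salt": "You are a weathered old sailor. Answer with seafaring wisdom.",
    "Pirate Captain": "You are a boastful pirate captain. Answer in pirate slang.",
    "Navy Officer": "You are a disciplined navy officer. Answer precisely.",
    "Fisherman": "You are a patient fisherman. Answer with practical detail.",
}


def persona_prompt(persona, question):
    """Prefix the user's question with the selected persona's instructions."""
    instructions = PERSONAS[persona]  # raises KeyError for an unknown persona
    return f"{instructions}\nUser: {question}\nAssistant:"


prompt = persona_prompt("Pirate Captain", "How do I read a compass?")
```

Because only the prompt changes, the same loaded model can serve every persona without retraining.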

Objective 2

2. Create and evolve natural language processing systems.

The chatbot implements a foundational natural language processing system that can parse, understand, and respond to human language input in a meaningful way. The NLP capabilities include keyword extraction and categorization (greeting, farewell, emotional states, questions), sentiment analysis through keyword matching, and dynamic response generation based on conversational context. The system demonstrates evolution through its extensible architecture, where new language patterns and responses can be easily added to the keyword maps and response databases. The conversation history tracking and word counting functionality show how NLP systems can analyze linguistic patterns over time, while the multi-threaded response processing demonstrates real-time language processing capabilities essential for interactive NLP applications.
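A minimal sketch of keyword categorization, keyword-based sentiment, and word counting is shown below. It is written in Python for illustration (the original chatbot is C++), and the keyword sets and function names are hypothetical stand-ins for the project's keyword maps.

```python
import re
from collections import Counter

# Hypothetical keyword map; the project's real map is not shown in the text.
KEYWORDS = {
    "greeting": {"hello", "hi", "hey"},
    "farewell": {"bye", "goodbye"},
    "positive": {"happy", "great", "good"},
    "negative": {"sad", "angry", "bad"},
}


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def categorize(text):
    """Return every category whose keywords appear, plus 'question' for a trailing '?'."""
    words = set(tokenize(text))
    categories = sorted(cat for cat, keys in KEYWORDS.items() if words & keys)
    if text.strip().endswith("?"):
        categories.append("question")
    return categories


def sentiment(text):
    """Score = positive keyword hits minus negative keyword hits."""
    words = tokenize(text)
    score = sum(w in KEYWORDS["positive"] for w in words)
    score -= sum(w in KEYWORDS["negative"] for w in words)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


# Word counting across the conversation history.
word_counts = Counter()
for line in ["Hello, I am happy today", "Why is the sea so bad?"]:
    word_counts.update(tokenize(line))
```

Extending the system to a new language pattern means adding an entry to `KEYWORDS`, which mirrors the extensibility described above.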

The chatbot shows solid natural language processing by using a local language model (Llama) that understands user messages and responds like a real person would. The system handles natural conversation well by keeping track of what was said before through its context window of 2048 tokens, so it can have back-and-forth conversations that make sense. The chatbot also shows how NLP systems can be customized by adding personality instructions to every user input, which transforms regular questions into Texas cowboy-style responses. The code includes smart text processing features like stop tokens that prevent the AI from getting confused about who's talking, and token limits that control how long responses can be. This demonstrates practical NLP system design where you need to manage how the AI processes and generates text to work properly in real applications.
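The context window and stop-token handling described above can be sketched in plain Python. This is an illustration under stated assumptions: `count_tokens` is a crude word-based stand-in for a real tokenizer, and the `User:`/`Assistant:` turn markers, the `reserve` parameter, and both helper names are hypothetical.

```python
def count_tokens(text):
    # Crude stand-in: real tokenizers split text into subword tokens.
    return len(text.split())


def build_prompt(persona, history, user_msg, budget=2048, reserve=256):
    """Keep the newest turns that fit the budget, leaving room for the reply."""
    header = persona + "\n"
    tail = f"User: {user_msg}\nAssistant:"
    remaining = budget - reserve - count_tokens(header) - count_tokens(tail)
    kept = []
    for turn in reversed(history):  # walk from newest to oldest
        cost = count_tokens(turn)
        if cost > remaining:
            break
        kept.append(turn)
        remaining -= cost
    body = "".join(turn + "\n" for turn in reversed(kept))
    return header + body + tail


def cut_at_stop(text, stops=("User:", "Assistant:")):
    """Truncate generated text at the first stop sequence, if any appears."""
    positions = [i for i in (text.find(s) for s in stops) if i != -1]
    return text[: min(positions)].strip() if positions else text.strip()
```

Dropping the oldest turns first keeps the most recent exchange intact, and cutting at a stop sequence prevents the model from continuing the conversation on the user's behalf.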

Objective 3

3. Demonstrate proficiency in designing and developing machine learning systems using industry approaches and patterns.

CSC338: Final Project: End-to-end implementation of a Data Science Project using behavioral dataset

This project demonstrates proficiency in designing and developing machine learning systems using industry-standard approaches and patterns. It follows an end-to-end data science workflow, including data preprocessing, exploratory data analysis, feature engineering, model training, and evaluation. By using techniques such as label encoding, correlation analysis, and cross-validation, and implementing models like Random Forest and Logistic Regression, the project adheres to best practices seen in professional machine learning pipelines. The modular and reproducible structure of the notebook aligns with scalable industry solutions.
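To make three of the named techniques concrete, the sketch below implements label encoding, Pearson correlation, and k-fold splitting in plain Python. It is not the notebook's code: libraries such as scikit-learn provide equivalents (for example `LabelEncoder` and `cross_val_score`), and the helper names here are hypothetical.

```python
def label_encode(values):
    """Map each distinct category to an integer, in sorted order."""
    mapping = {v: i for i, v in enumerate(sorted(set(values)))}
    return [mapping[v] for v in values], mapping


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sum((x - mx) ** 2 for x in xs) ** 0.5
    sy = sum((y - my) ** 2 for y in ys) ** 0.5
    return cov / (sx * sy)


def k_fold(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in sizes:
        test = list(range(start, start + size))
        train = [i for i in range(n_samples) if i < start or i >= start + size]
        yield train, test
        start += size


encoded, mapping = label_encode(["low", "high", "low"])
folds = list(k_fold(5, 2))
```

In cross-validation, a model is trained on each `train` split and scored on the matching `test` split, and the scores are averaged to estimate how well it generalizes.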

Assignment 8.1: Machine Learning Model Implementation - Classification Models

Assignment 8.1 Machine Learning Model Implementation Classification Models Word Doc

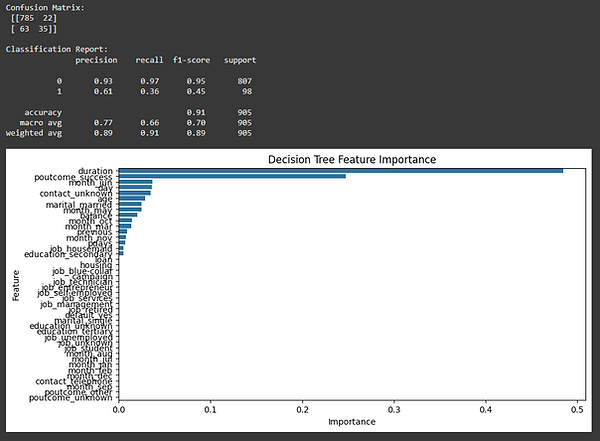

This work demonstrates proficiency in designing and developing machine learning systems by applying industry-standard approaches such as implementing and comparing multiple classification algorithms (KNN, logistic regression, and decision trees). It shows a thoughtful process of hyperparameter tuning (adjusting k in KNN), evaluating model performance using precision, recall, and ROC curves, and analyzing feature importance to interpret model behavior. The ability to identify strengths and weaknesses of each model and select the most appropriate one based on business needs reflects a practical, industry-aligned approach to building and refining machine learning solutions.
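The effect of tuning k in KNN and of scoring with precision and recall can be illustrated with a small, self-contained sketch. The data points below are invented for the example and are not from the assignment; the functions are a simplified stand-in for library implementations.

```python
from collections import Counter


def knn_predict(train, query, k):
    """train is a list of (feature_vector, label); vote among the k nearest."""
    nearest = sorted(
        train,
        key=lambda item: sum((a - b) ** 2 for a, b in zip(item[0], query)),
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def precision_recall(y_true, y_pred, positive=1):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up one-dimensional data; the point at 0.4 acts as a noisy label.
train = [([0.0], 0), ([0.2], 0), ([0.9], 1), ([1.0], 1), ([0.4], 1)]
test_x, test_y = [[0.1], [0.35], [0.95]], [0, 0, 1]

results = {
    k: precision_recall(test_y, [knn_predict(train, x, k) for x in test_x])
    for k in (1, 3)
}
```

With k=1 the noisy neighbor causes a false positive, while k=3 outvotes it, which is the kind of trade-off that hyperparameter tuning is meant to expose.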

Objective 4

4. Design and employ deep learning systems using best practices and conventions.

Multi-Personality Maritime AI Chatbot

The maritime AI chatbot demonstrates the employment of deep learning systems through its integration with the Llama 3.1 8B model, which represents a state-of-the-art transformer-based large language model built on deep learning architectures. The implementation follows best practices by properly configuring the model with appropriate parameters such as context window size (n_ctx=2048), thread optimization (n_threads=8), and token limits (max_tokens=1024). The code employs conventional deep learning deployment patterns including model loading, inference management, and resource optimization. The use of a quantized model (Q5_K_M format) demonstrates understanding of model compression techniques commonly used in production deep learning systems. Additionally, the implementation includes proper memory management and cleanup procedures, which are essential conventions when working with large deep learning models in resource-constrained environments.
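A minimal sketch of how these parameters might be passed to llama-cpp-python follows. The values for `n_ctx`, `n_threads`, and `max_tokens` are the ones quoted above; the stop sequence, the `generate` helper, and the way cleanup is done are assumptions, and the model path is left to the caller.

```python
MODEL_CONFIG = {"n_ctx": 2048, "n_threads": 8}  # values quoted in the text
GENERATION_CONFIG = {"max_tokens": 1024, "stop": ["User:"]}  # stop value is assumed


def generate(model_path, prompt):
    """Load the model, run one completion, and release the model afterwards."""
    # Imported lazily so the sketch can be loaded without the library installed.
    from llama_cpp import Llama

    llm = Llama(model_path=model_path, **MODEL_CONFIG)
    try:
        result = llm(prompt, **GENERATION_CONFIG)
        return result["choices"][0]["text"].strip()
    finally:
        del llm  # drop the reference so the model's memory can be reclaimed
```

In a desktop application the model would more likely be loaded once and reused across messages; loading it per call here only keeps the cleanup step visible.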

This AI chatbot demonstrates the employment of deep learning systems through its integration of the Llama language model, which is built on the transformer architecture (a state-of-the-art deep learning architecture, not a framework in the software sense). The implementation follows best practices by using the llama-cpp library, which provides an optimized inference engine specifically designed for large language models. The code demonstrates proper resource management conventions, including explicit cleanup of the LLM instance after use, appropriate context window sizing, and multi-threading configuration for efficient processing. The system employs industry-standard practices for model inference by using quantized models to balance performance and resource usage, implementing stopping criteria to control generation, and setting token limits to prevent runaway generation.
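The trade-off behind quantization can be illustrated with a simple symmetric scheme that stores weights as small integers plus one scale factor. This is a teaching sketch only: it is not the block-wise scheme used by the Q5_K_M format, and the function names and sample weights are hypothetical.

```python
def quantize(weights, bits=8):
    """Map floats to signed integers that share one scale factor."""
    qmax = 2 ** (bits - 1) - 1  # e.g. 127 for 8 bits
    largest = max(abs(w) for w in weights)
    scale = largest / qmax if largest else 1.0  # avoid dividing by zero
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [v * scale for v in quantized]


weights = [0.5, -1.0, 0.25, 0.0]
q, scale = quantize(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Fewer bits shrink the stored model but enlarge the rounding error, which is why quantized formats balance resource usage against output quality.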

Objective 5

5. Demonstrate a deep understanding of neural networks and their role in creating intelligent systems.

The chatbot showcases a good understanding of how neural networks function in intelligent systems through its implementation of a conversational AI that leverages the multi-layered attention mechanisms inherent in transformer-based language models. The code demonstrates understanding of neural network behavior by implementing personality prompting ("You are a wise and knowledgeable Bible study leader..."), which works by conditioning the network on instruction text so that its output stays contextually appropriate. The system shows comprehension of how neural networks process sequential information by maintaining conversational context and providing coherent, domain-specific responses. Furthermore, the implementation reveals understanding of neural network optimization through the use of quantized models and efficient inference parameters. It recognizes that these networks require careful tuning of settings such as context length and thread allocation to function effectively as the "brain" of an intelligent conversational system that can understand, process, and generate human-like responses in real time.

The chatbot demonstrates practical understanding of neural networks by implementing and configuring a transformer-based language model (Llama 3.1 8B) that uses deep neural network architectures to process and generate text. The code shows knowledge of how neural networks function in intelligent systems through its parameter configuration: setting the context window shows understanding of how neural networks handle sequential data and memory limitations, while the thread allocation demonstrates awareness of how neural network computations can be optimized for hardware. The implementation reveals understanding of neural network behavior through its use of sampling parameters and stop tokens, which control how the network generates predictions and when to halt output generation. By successfully integrating a quantized model, the project shows practical knowledge of neural network optimization techniques used to make large models run efficiently on consumer hardware. The chatbot's ability to maintain consistent personality and context throughout conversations demonstrates understanding of how transformer neural networks use attention mechanisms and learned representations to create coherent, intelligent responses in real-world applications.
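The attention mechanism mentioned above can be written out in a few lines. The sketch below implements scaled dot-product attention, softmax(QK^T / sqrt(d)) V, over plain Python lists; it omits the learned projections, multiple heads, and causal masking that a real transformer such as Llama uses, and the function names are chosen for the example.

```python
import math


def softmax(xs):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    width = len(values[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(width)]
        )
    return outputs


out = attention([[5.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[10.0, 0.0], [0.0, 10.0]])
```

The query here aligns with the first key, so most of the output comes from the first value vector, which is how attention lets earlier tokens in the context influence the next prediction.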

Objective 6

6. Design, create, or integrate reinforcement learning, large language models, and genetic algorithms in intelligent software.

Multi-Personality Maritime AI Chatbot

This chatbot clearly demonstrates working with large language models in real software. The chatbot uses an 8-billion-parameter model, Dolphin3.0-Llama3.1-8B, and configures it for practical use. The setup includes important technical details such as setting the context window (how much text the model can consider at once), using multiple CPU threads for speed, and limiting how long responses can be. The most impressive part is how the chatbot uses prompt engineering: it automatically adds personality instructions to every user question, which makes the model respond as different characters without retraining. The chatbot also controls the model's output using stop sequences and maintains smooth conversations, showing advanced understanding of how to work with large language models in practical applications.

This project directly shows how to integrate large language models into working software by implementing the Llama 3.1 8B model using the llama_cpp library. The chatbot demonstrates practical LLM integration by setting up important model settings like how much conversation history to remember, how many CPU cores to use for processing, and how to control response generation. The software design shows good practices for putting LLMs into desktop apps, including proper memory management and connecting the AI to a user-friendly interface. The system uses prompt engineering techniques by automatically adding personality instructions to user messages, which guides how the LLM behaves and responds. This shows how to effectively use large language models for specialized chatbot applications. The code also includes cleanup procedures that properly shut down the AI model, demonstrating understanding of resource management when working with large AI systems in real software.